Serving a machine learning model via API

About the plumber package

In order to serve our API we will make use of the great plumber package for R.

To read more about this package go to:

https://www.rplumber.io/docs/

Setup

Load in some packages.

If you are going to host the API on a SUSE or Red Hat Linux server, make sure that the system dependencies as well as the R packages are installed so that you can follow this example yourself.

library(EightyR)
load_toolbox()
load_pkg(c("plumber", "caret"))

In order to serve an API you need to store all the functions that you want to serve inside an R script.

Hello world example

As our “hello world” example we create a script called hello_api.R:

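# hello_api.R

# Return the mean of `samples` random draws from a standard normal
#* @get /mean
normalMean <- function(samples = 10) {
  data <- rnorm(samples)
  mean(data)
}

# Return the sum of two values sent in the request body
#* @post /sum
addTwo <- function(a, b) {
  as.numeric(a) + as.numeric(b)
}

Notice that we use the special comments #* to declare the HTTP method of each endpoint (@get, @post) and the path it is served on.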
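Query-string and form values generally reach a plumber endpoint as character strings, which is why addTwo calls as.numeric(). A minimal sketch of the same idea with an explicit check follows; the /mean_checked path and the function name are hypothetical and not part of hello_api.R.

```r
# Hypothetical variant of the /mean endpoint (not in the original script).
# Assumes `samples` may arrive as a string such as "100".

#* @get /mean_checked
normal_mean_checked <- function(samples = 10) {
  # "100" becomes 100L; anything non-numeric becomes NA
  n <- suppressWarnings(as.integer(samples))
  if (length(n) != 1 || is.na(n) || n < 1) {
    stop("samples must be a single positive integer")
  }
  mean(rnorm(n))
}
```

Because the function is plain R, it can be tried out directly, for example normal_mean_checked("100"), before it is ever served.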

We can spin up this api on a specified local port using the following R code in another script run_hello_api.r:

#!/usr/bin/Rscript
# run_hello_api.r

library(EightyR)
load_toolbox()
load_pkg("plumber")

r <- plumb("hello_api.R")  # 'hello_api.R' is the location of the file shown above
r$run(host = "0.0.0.0", port = 8000)

Remember to give this file execute permission if you want to run it using ./; you can do this with chmod +x run_hello_api.r.

We will run this process in a shell using the following bash code:

./run_hello_api.r

Now that this process is running we can make some requests:

curl "http://127.0.0.1:8000/mean"
[-0.2278]

Providing a parameter using ?var= syntax:

curl "http://127.0.0.1:8000/mean?samples=10000"
[0.007]

Making a request using "a=&b=" syntax:

curl --data "a=4&b=3" "http://127.0.0.1:8000/sum"
[7]

Using JSON syntax:

curl --data '{"a":4, "b":5}' http://127.0.0.1:8000/sum
[9]

Make sure to close the job when you are done testing the api!

Hosting the api

So now that we know how to use the plumber package, let’s get our hands dirty…

Create a basic model

For our practical example we will build a basic caret regression model and serve it via an API, so that someone can request a prediction by supplying properly named inputs for the model.

Let’s predict how long an animal will sleep given some descriptors of said animal.

What the data looks like:

example_data <-
  ggplot2::msleep %>%
  select(sleep_total, vore, bodywt, brainwt, awake) %>%
  na.omit()

example_data
## # A tibble: 51 x 5
##    sleep_total vore  bodywt brainwt awake
##          <dbl> <chr>  <dbl>   <dbl> <dbl>
##  1          17 omni    0.48  0.0155     7
##  2        14.9 omni   0.019 0.00029   9.1
##  3           4 herbi    600   0.423    20
##  4        10.1 carni     14    0.07  13.9
##  5           3 herbi   14.8  0.0982    21
##  6         5.3 herbi   33.5   0.115  18.7
##  7         9.4 herbi  0.728  0.0055  14.6
##  8        12.5 herbi   0.42  0.0064  11.5
##  9        10.3 omni    0.06   0.001  13.7
## 10         8.3 omni       1  0.0066  15.7
## # ... with 41 more rows

Construct a naive model

example_model <-
  caret::train(form = sleep_total ~ ., data = example_data, method = "rf")

# example_model %>% saveRDS("data/example_model.rds")

example_model
## Random Forest
##
## 51 samples
##  4 predictor
##
## No pre-processing
## Resampling: Bootstrapped (25 reps)
## Summary of sample sizes: 51, 51, 51, 51, 51, 51, ...
## Resampling results across tuning parameters:
##
##   mtry  RMSE       Rsquared   MAE
##   2     1.7612212  0.8756261  1.3182399
##   4     1.0041945  0.9582245  0.7173262
##   6     0.6508145  0.9789417  0.4539108
##
## RMSE was used to select the optimal model using the smallest value.
## The final value used for the model was mtry = 6.

The model is simple enough to train on this data, and without any further tuning the resampled error is roughly half an hour (MAE of about 0.45 hours, RMSE of about 0.65 hours).
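The API script further down reads the fitted model from an .rds file, so the model has to be written to disk once after training (the commented-out saveRDS() line above). A minimal sketch of that round trip, using a base R lm() on the built-in mtcars data as a stand-in so it runs without caret; the temporary file location is an assumption made for illustration only.

```r
# Stand-in model: a base R linear model, used only so that this sketch
# runs without caret installed. The post itself saves `example_model`.
fit <- lm(mpg ~ wt + hp, data = mtcars)

# Hypothetical location; the post uses data/example_model.rds.
model_path <- file.path(tempdir(), "example_model.rds")
saveRDS(fit, model_path)

# Later, for example at the top of the API script, restore the object.
restored <- readRDS(model_path)

# The restored model should give the same prediction as the original.
new_case <- data.frame(wt = 3.0, hp = 110)
stopifnot(isTRUE(all.equal(predict(fit, new_case),
                           predict(restored, new_case))))
```

Note that readRDS() returns the object rather than creating it in the workspace, so its result has to be assigned to a name.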

Create the api

OK, so first we need to define the plumber.R file that we will serve.

Notice that we can annotate the endpoint quite verbosely. The annotations describe the parameters and responses of the API, and they also populate the HTML documentation page that a user can open to learn how to use it.

This was based on the awesome post by the blogger Shirin:

https://shirinsplayground.netlify.com/2018/01/plumber/

# script name:
# plumber.R

# set API title and description to show up in http://127.0.0.1:8000/__swagger__/

#' @apiTitle Run predictions for the total hours of sleep of an animal
#' @apiDescription This API takes data on an animal's sleep-related factors and predicts how long the animal will sleep on its next cycle.
#' The prediction indicates hours of sleep.

# load model
# this path would have to be adapted if you would deploy this
# load("/Users/shiringlander/Documents/Github/shirinsplayground/data/model_rf.RData")
# assign the result: readRDS() returns the object instead of loading it by name
example_model <- readRDS("/usr/etc/learning_api_in_R/data/example_model.rds")

#' Log system time, request method and HTTP user agent of the incoming request
#' @filter logger
function(req) {
  cat("System time:", as.character(Sys.time()), "\n",
      "Request method:", req$REQUEST_METHOD, req$PATH_INFO, "\n",
      "HTTP user agent:", req$HTTP_USER_AGENT, "@", req$REMOTE_ADDR, "\n")
  plumber::forward()
}

# core function follows below:
# define parameters with type and description
# name endpoint
# return output as html/text
# specify 200 (okay) return

#' predict Chronic Kidney Disease of test case with Random Forest model

#' @param vore Character variable. Options are : carni, herbi, insecti, omni.

#' @param bodywt:numeric Numeric variable. Weight in lb of the animal.

#' @param brainwt:numeric Numeric variable. Weight in lb of the animal.

#' @param awake:numeric Numeric variable. Time animal has been awake.

#' @post /predict

#' @html

#' @response 200 Returns the hour(s) prediction from the Random Forest model predicting hours of next sleep

calculate_prediction <- function(vore,bodywt,brainwt,awake) {

# make data frame from parameters

input_data <<- data.frame(vore,bodywt,brainwt,awake,

stringsAsFactors = FALSE)

input_data <-

input_data %>%

mutate(

vore = vore %>% as.numeric(),

bodywt = bodywt %>% as.numeric(),

brainwt = brainwt %>% as.numeric(),

awake = awake %>% as.numeric()

)

# and make sure they really are the right format

if(all(input_data["vore"] %>% class != "character")){

res$status <- 400

res$body <- "Parameters have to be in the right format. vore - character"

}

if( all(input_data[c("bodywt","brainwt","awake")] %>% class != "numeric") ){

res$status <- 400

res$body <- "Parameters have to be in the right format. vore - character"

}

# validation for parameter

if (any(is.na(input_data))) {

res$status <- 400

res$body <- "Parameters have to be numeric or integers - NA's intriduced by coercion"

}

# predict and return result

pred_rf <<- predict(example_model, input_data)

paste("----------------\nNext sleep cycle predicted to be", as.character(pred_rf), "\n----------------\n")

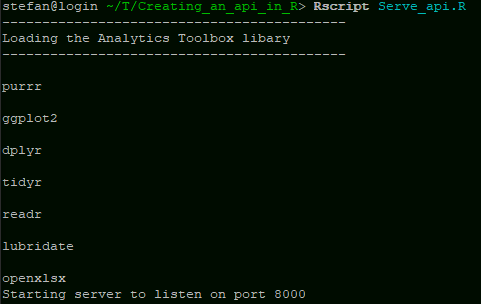

}Setup executable script for the server to execute:

Let’s call this Serve_api.R

#!/usr/bin/env Rscript

library(EightyR)
load_toolbox()
load_pkg("plumber")

r <- plumb("plumber.R")
r$run(host = "0.0.0.0", port = 8000)

OK, so up to now we have set up all the files we need to serve this API. Now we can ssh into a server and clone this repo over there.

Remember to change the file paths in the script to suit your new machine, or cd into the repository before running the script.
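One way to avoid editing paths by hand on every machine is to read the model location from an environment variable and fall back to a path relative to the repository. This is only a sketch of that idea: the variable name MODEL_PATH and the helper resolve_model_path() are hypothetical and do not appear in the original scripts.

```r
# MODEL_PATH is a hypothetical environment variable name chosen for this sketch.
resolve_model_path <- function(default = "data/example_model.rds") {
  # Use the environment variable when it is set, otherwise the default path
  path <- Sys.getenv("MODEL_PATH", unset = default)
  if (!file.exists(path)) {
    stop("Model file not found: ", path)
  }
  path
}

# In the API script this could replace the hard-coded path:
# example_model <- readRDS(resolve_model_path())
```

Failing early with a clear message makes a wrong path visible when the API starts rather than on the first prediction request.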

For this experiment I quickly launched an AWS EC2 instance to serve the API from.

Note that with Amazon Linux you need to make sure all the dependencies are installed.

SSH into the server

In any shell:

ssh -i "path/{My_Private_Key}.pem" ec2-user@ec2{My_IP}.eu-west-1.compute.amazonaws.com

Clone the repo with your code:

git clone https://github.com/lurd4862/Testing_apis_R.git

Change the paths referencing your model…

cd into the repository…

Run the process in a shell:

Rscript Serve_api.R

You can background this process with nohup (for example nohup Rscript Serve_api.R &) so that the API does not stop when you leave this shell.

Now that the api is running on the port we specified in the run script we can access it:

Request the following test case using JSON input:

curl -H "Content-Type: application/json" -X GET -d '{"vore":"carni", "bodywt":85, "brainwt":0.325, "awake":17.8}' http://127.0.0.1:8000/predict

Next sleep cycle predicted to be 10.9981933333333

The same request against the EC2 instance:

curl -H "Content-Type: application/json" -X GET -d '{"vore":"carni", "bodywt":85, "brainwt":0.325, "awake":17.8}' ec2-34-242-232-117.eu-west-1.compute.amazonaws.com/predict

Or as a URL with query parameters:

ec2-34-242-232-117.eu-west-1.compute.amazonaws.com/predict?vore=carni&bodywt=85&brainwt=0.325&awake=17.8
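In a query string, the first parameter follows a ? and every further parameter is joined with &, with values URL-encoded. A minimal base R sketch for building such a URL from a named list; build_query_url() is a hypothetical helper written for this example, not part of plumber.

```r
# Hypothetical helper: build a request URL with a query string from a named list.
build_query_url <- function(base_url, params) {
  # Encode each value so characters like spaces or & do not break the URL
  encoded <- vapply(
    params,
    function(v) utils::URLencode(as.character(v), reserved = TRUE),
    character(1)
  )
  paste0(base_url, "?", paste(names(params), encoded, sep = "=", collapse = "&"))
}

url <- build_query_url(
  "http://127.0.0.1:8000/predict",
  list(vore = "carni", bodywt = 85, brainwt = 0.325, awake = 17.8)
)
print(url)
# "http://127.0.0.1:8000/predict?vore=carni&bodywt=85&brainwt=0.325&awake=17.8"
```

The resulting string can be pasted into a browser or passed to curl in quotes, so that the shell does not interpret the & characters.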